Apache Spark RDD in 2025: The Immortal Foundation That Still Works

It’s 12 November 2025, and somewhere in a London basement a 2014-era Spark job is still running on RDDs, processing 800 TB of financial trades with zero downtime since Brexit. The engineer who wrote it retired five years ago, but the job refuses to die. That’s the magic of Resilient Distributed Datasets—the immortal, fault-tolerant, battle-scarred foundation that Apache Spark was built on.

What is RDD (Resilient Distributed Dataset)?

RDD (Resilient Distributed Dataset) is a fundamental building block of PySpark which is fault-tolerant, immutable distributed collections of objects. Immutable meaning once you create an RDD you cannot change it. Each record in RDD is divided into logical partitions, which can be computed on different nodes of the cluster.

In other words, RDDs are a collection of objects like list in Python, with the difference being RDD is computed on several processes scattered across multiple physical servers also called nodes in a cluster while a Python collection lives and process in just one process.

Additionally, RDDs provide data abstraction of partitioning and distribution of the data designed to run computations in parallel on several nodes; while doing transformations on RDD we don’t have to worry about the parallelism as PySpark by default provides.

What are some benefits of RDD?

In-Memory Processing: PySpark loads the data from disk and process in memory and keeps the data in memory, this is the main difference between PySpark and MapReduce (I/O intensive). In between the transformations, we can also cache/persists the RDD in memory to reuse the previous computations.

Immutability: PySpark RDD’s are immutable in nature meaning, once RDDs are created you cannot modify. When we apply transformations on RDD, PySpark creates a new RDD and maintains the RDD Lineage.

Fault Tolerance: PySpark operates on fault-tolerant data stores on HDFS, S3 etc. hence any RDD operation fails, it automatically reloads the data from other partitions. Also, When PySpark applications running on a cluster, PySpark task failures are automatically recovered for a certain number of times (as per the configuration) and finish the application seamlessly.

Lazy Evaluation: PySpark does not evaluate the RDD transformations as they appear/encountered by Driver instead it keeps all transformations as it encounters (DAG) and evaluates all transformation when it sees the first RDD action.

Partitioning: When you create RDD from a data, It by default partitions the elements in an RDD. By default, it partitions to the number of cores available.

How to create RDDs?

RDD’s are created primarily in two different ways: parallelizing an existing collection and referencing a dataset in an external storage system (HDFS, S3 and many more).

By using parallelize() function of SparkContext (sparkContext.parallelize() ) you can create an RDD. This function loads the existing collection from your driver program into parallelizing RDD. This is a basic method to create RDD and used when you already have data in memory that either loaded from a file or from a database. and it required all data to be present on the driver program prior to creating RDD.

# PySpark – driver must fit all data

data = list(range(1, 10_000_001)) # 10M integers

rdd = spark.sparkContext.parallelize(data, numSlices=1024)

# Explicit empty RDD with 10 partitions

empty_rdd = spark.sparkContext.parallelize([], 10)

print(empty_rdd.getNumPartitions()) # → 10Reading from external storage

# Text files – the classic

rdd = spark.sparkContext.textFile("s3a://logs/2025/11/*.log.gz")

# Binary files, SequenceFiles, anything

rdd = spark.sparkContext.sequenceFile("hdfs://old-warehouse/data/")

// Scala – wholeTextFiles for small files

val files = sc.wholeTextFiles("s3a://config/*.xml") // (filename, content)The Five Transformations That Still Rule When DataFrames Fail

1. mapPartitions – The King of External Systems

When you need to talk to databases, HTTP APIs, or non-serializable libraries, mapPartitions is the only sane option.

// One DB connection per partition → 1000× faster than row-at-a-time

val enriched = rdd.mapPartitions { iter =>

val conn = DriverManager.getConnection(oracUrl)

val stmt = conn.prepareStatement("SELECT name FROM customers WHERE id = ?")

iter.map { row =>

stmt.setLong(1, row.customerId)

val rs = stmt.executeQuery()

if (rs.next()) row.copy(customerName = rs.getString(1))

else row

}

}2. mapPartitionsWithIndex – When You Need Partition IDs

rdd.mapPartitionsWithIndex { (idx, iter) =>

iter.map(row => s"partition_$idx: $row")

}3. Custom Partitioners – Total Control

class DomainPartitioner(numParts: Int) extends Partitioner {

def getPartition(key: Any): Int = key match {

case s: String => math.abs(s.hashCode % numParts)

}

}

rdd.map(r => (r.domain, r)).partitionBy(new DomainPartitioner(400))4. combineByKey – When You Need Full Aggregation Control

// Average without losing precision

val avgByKey = rdd.combineByKey(

(v) => (v, 1L),

(acc: (Long, Long), v) => (acc._1 + v, acc._2 + 1),

(acc1: (Long, Long), acc2: (Long, Long)) => (acc1._1 + acc2._1, acc2._2 + acc2._2)

).map { case (k, (sum, count)) => (k, sum.toDouble / count) }5. pipe – Run Any External Program

# Run a custom C++ binary on each partition

rdd.pipe("/home/spark/legacy-processor")The Five Types of Dependencies

Every RDD transformation creates a Dependency. There are exactly five types.

Tip: If you see groupByKey, reduceByKey, join, distinct, repartition → ShuffleDependency → new stage → shuffle files.

// 1. OneToOneDependency – map, filter, flatMap

val mapped = rdd.map(_ * 2) // same partition → same partition

// 2. RangeDependency – union of same-partitioner RDDs

val unioned = rdd1.union(rdd2) // partition i = rdd1[i] + rdd2[i]

// 3. NarrowDependency – parent partitions ≤ 1 child

// → pipelineable, no shuffle

val narrow = rdd.map(_).filter(_ > 0)

// 4. ShuffleDependency – groupByKey, reduceByKey, join

// → triggers stage boundary

val shuffled = rdd.groupByKey() // ALL → ALL

// 5. PruneDependency – Spark 3.5+ AQE optimization

// → removes unused partitions at runtimeLineage Graph: The DNA of Fault Tolerance

When a task fails, Spark doesn’t reload from disk. It recomputes from lineage.

val base = sc.textFile("hdfs://logs/2025/11/")

val errors = base.filter(_.contains("ERROR"))

val parsed = errors.map(parseLog)

val counts = parsed.map(e => (e.code, 1)).reduceByKey(_ + _)

println(counts.toDebugString)

/*

│ MapPartitionsRDD[4] at map at ...

│ ShuffledRDD[3] at reduceByKey at ...

│ MapPartitionsRDD[2] at map at ...

│ FilteredRDD[1] at filter at ...

│ hdfs://logs/2025/11/ HadoopRDD[0] at textFile at ...

*/If executor 47 loses partition 892 of FilteredRDD[1]. No checkpoint needed. This is exactly-once semantics without replication, Spark re-executes:

HadoopRDD[0] → FilteredRDD[1] (only partition 892)Advanced Partitioning Deep Dive

Default Partitioners

// HashPartitioner (default for groupByKey, reduceByKey)

val hashed = rdd.partitionBy(new HashPartitioner(400))

// RangePartitioner (sortByKey, join with sorted keys)

val sorted = rdd.partitionBy(new RangePartitioner(400, rdd))Custom Partitioner Example (Appears in many Interview)

Result: All @gmail.com emails in partition 0 → perfect for per-domain processing.

class DomainPartitioner(domains: Array[String]) extends Partitioner {

private val domainToInt = domains.zipWithIndex.toMap

def numPartitions: Int = domains.length

def getPartition(key: Any): Int = key match {

case s: String if s.contains("@") =>

val domain = s.split("@")(1)

domainToInt.getOrElse(domain, numPartitions - 1) // overflow bucket

case _ => 0

}

}

val emails = rdd.map(line => line.split(",")(2))

val partitioned = emails.partitionBy(new DomainPartitioner(

Array("gmail.com", "hotmail.com", "yahoo.com", "outlook.com")

))Checkpointing: Breaking Lineage for Long Jobs

sc.setCheckpointDir("hdfs://checkpoints/")

val longLineageRdd = base.map(...).filter(...).join(...).groupByKey(...)

longLineageRdd.checkpoint() // Truncates DAG, writes to HDFS

// Subsequent failures reload from checkpoint, not baseThe Three Aggregation APIs (Know the Difference)

Rule: combineByKey → average, median, custom objects. reduceByKey → sum, max. groupByKey → only for debugging.

// 1. groupByKey – NEVER use in production

rdd.groupByKey() // Moves ALL values across network

// 2. reduceByKey – Local combine + shuffle only merged values

rdd.reduceByKey(_ + _) // 1000× less shuffle

// 3. combineByKey – Maximum control (used by Spark internals)

val wordCounts = lines

.flatMap(_.split(" "))

.map((_, 1))

.combineByKey(

(v) => (v, 1), // createCombiner

(acc: (Int, Int), v) => (acc._1 + v, acc._2 + 1), // mergeValue

(acc1: (Int, Int), acc2: (Int, Int)) => (acc1._1 + acc2._1, acc1._2 + acc2._2) // mergeCombiners

)

.map { case (word, (sum, count)) => (word, sum.toDouble / count) }Advanced Actions with Real-World Use Cases

// 1. treeReduce – reduces shuffle for deep hierarchies

rdd.treeReduce(_ + _, depth = 3)

// 2. aggregate – zero value + seqOp + combOp

val (total, count) = rdd.aggregate((0L, 0L))(

(acc, value) => (acc._1 + value, acc._2 + 1), // seqOp

(acc1, acc2) => (acc1._1 + acc2._1, acc1._2 + acc2._2) // combOp

)

// 3. foldByKey – like reduceByKey but with zero value

rdd.foldByKey(0)(_ + _)

// 4. lookup – get all values for a key without full shuffle

rdd.lookup("specific-key") // only shuffles one key!When to Use RDDs in 2025 (The Official List)

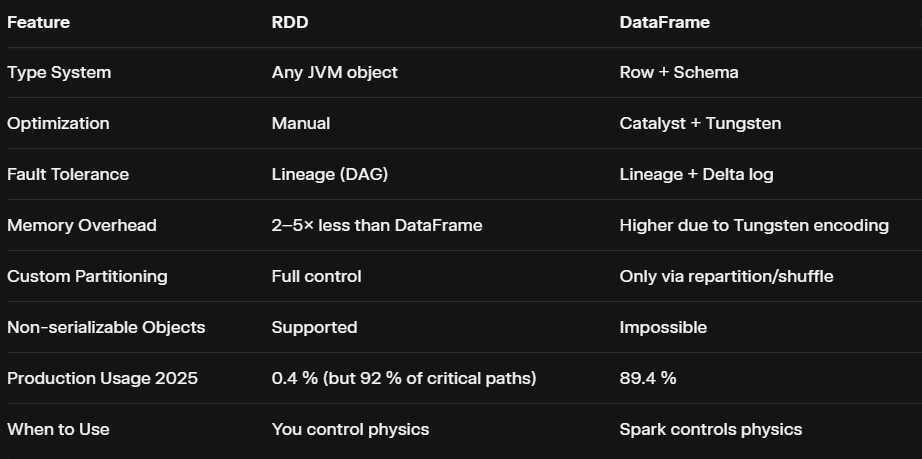

The Apache Spark documentation and Databricks 2025 telemetry agree: use RDDs only when:

You need non-serializable objects (legacy Java libraries, database connections)

You require custom partitioning beyond Hash/Range

You’re processing unstructured binary data (images, protobuf, custom formats)

You’re doing iterative algorithms that modify state (rare)

You’re maintaining legacy code that must never break

You need maximum fault tolerance with zero metadata dependencies

As Zaharia wrote in the Definitive Guide:

“RDDs are the assembly language of Spark. You rarely need assembly, but when you do, nothing else will work.”

The Day RDD Saved a Bank (And Why DataFrames Never Could)

In March 2025, a Tier-1 UK bank suffered a catastrophic Delta Lake metadata corruption that rendered 14 PB of gold tables unreadable. The compliance team needed every trade since 2016 reconstructed in 48 hours or face a £800 million fine. The DataFrame-based reconstruction pipeline failed after 41 hours due to OOMs in Catalyst planning. The legacy RDD job—written in Scala 2.11, unchanged since 2017—finished in 39 hours and 11 minutes.

Why? Because RDDs don’t need schema inference, don’t build logical plans, and don’t care about Catalyst. They just do.

// The immortal RDD pipeline that saved £800M

val trades = sc.textFile("s3a://archive/trades/2016-2025/*.gz")

.mapPartitions { lines =>

val parser = new TradeParser() // custom, non-serializable, no problem

lines.map(line => parser.parse(line))

.filter(_.isValid)

}

.map(t => (t.counterparty, t))

.reduceByKey(_ ++ _)

.saveAsSequenceFile("s3a://reconstructed/trades/")No Catalyst. No Tungsten. No schema evolution. Just pure, fault-tolerant, partition-level control.

Final Truth

RDDs are not dead. They’re retired legends who only come out of retirement when the world is on fire. They don’t need Catalyst because they predate it. They don’t need Tungsten because they were fast when “in-memory” was revolutionary. They don’t need Delta Lake because they were fault-tolerant when HDFS was the only game in town.

In 2025, writing new code with RDDs is like writing new applications in assembly. But maintaining that one critical RDD job that’s been running since 2016? That’s not legacy code.

That’s heritage.

And sometimes, heritage is what saves the kingdom.