Real-Time Data Magic: Mastering Stream Processing with Apache Spark Structured Streaming

In a world where data never sleeps — think stock trades flashing by the millisecond, sensors on millions of IoT devices churning out readings, or social media feeds exploding during a live event — traditional batch jobs that run hourly or daily simply can’t keep up. Stream processing is the superpower that lets us analyze and act on data the instant it arrives, turning an endless firehose of information into immediate insights and decisions. Apache Spark’s Structured Streaming, introduced in Spark 2.0 and polished ever since, brings the same easy-to-use, high-level APIs we love from batch processing (DataFrames and Datasets) into the wild world of continuous, real-time computation. The result? You can write streaming queries that look almost identical to batch queries, while Spark handles the hard parts — fault tolerance, exactly-once guarantees, and massive scale — behind the scenes.

What is Stream Processing?

At its heart, stream processing treats data as an unbounded, ever-growing table that keeps getting new rows appended to it. Instead of loading a fixed file or database table, your input source (Kafka topics, cloud storage folders, TCP sockets, etc.) is modeled as a table that never ends. Every time fresh data lands, Spark appends it as new rows. Your query — whether it’s a simple filter, a windowed aggregation, or a complex join — then runs continuously against this living table and pushes results out to sinks like databases, dashboards, Kafka, or even another streaming query.

Structured Streaming gives you three ways to emit results as the stream flows:

Complete mode: outputs the entire result table every time it changes (great for small-state queries like global counters).

Append mode: only outputs new, final rows that will never be updated (perfect for pure “new events only” use cases like alerts).

Update mode: outputs only the rows that changed since the last trigger (the most flexible and commonly used in real life).

One of the most elegant ideas in Structured Streaming is the concept of event-time processing. Real-world data is messy — packets arrive out of order, devices send logs minutes late, networks hiccup. Instead of processing records in the order they show up at the engine (processing time), Structured Streaming lets you process them according to the timestamp inside the data itself (event time). This makes windowed operations — “sales in the last 15 minutes” or “average temperature per hour” — actually meaningful even when data is delayed or shuffled.

Watermarking is the clever mechanism that saves us from infinite memory growth. You tell Spark, “no data will ever be more than, say, 10 minutes late.” Once the watermark passes a certain event-time point, Spark knows it’s safe to discard old aggregation state and move forward. Without watermarking, a single late record could force the system to keep state forever.

Under the hood, Structured Streaming is just an incrementally executing Spark batch job that runs every few seconds (or milliseconds) on the newest micro-batch of data. But because everything is built on the Catalyst optimizer and Tungsten execution engine, you get the same performance tricks and code generation you enjoy in regular Spark SQL. And when you need sub-second latency, you can flip into Continuous Processing mode (experimental in earlier versions, stable now), which turns the engine into a true continuous operator model instead of micro-batches.

In short, Structured Streaming lets you think in simple SQL/DataFrame terms while Spark quietly solves the notoriously hard problems of distributed streaming: exactly-once semantics, state management, late data, and fault recovery. Whether you’re building live dashboards, fraud detectors, or real-time ETL pipelines, it’s the reason many teams say “just use Spark” instead of reaching for half a dozen specialized streaming systems.

Where is stream processing useful? (Use cases)

When people ask why they should bother with streaming instead of just running bigger batch jobs more often, the answer almost always comes down to one (or more) of these practical scenarios that show up again and again in production.

Live dashboards that actually feel live Forget refreshing a BI report and seeing “as of 2 a.m.” Every modern company now expects dashboards that update within seconds. Engineering teams watch error rates and latency spikes, product managers track feature adoption the minute a new button goes out, and business teams monitor revenue or active users in real time. Structured Streaming is often the engine quietly keeping those numbers fresh.

Getting data into the lake or warehouse seconds after it’s born The classic “batch ETL, but faster” pattern remains the #1 driver for most Structured Streaming pipelines. Raw JSON from Kafka or cloud storage lands → gets parsed, cleaned, enriched, and written as Delta/Parquet tables almost instantly. Analysts and data scientists no longer wait hours for new data; they query tables that are only seconds behind reality. Exactly-once guarantees and fault tolerance are non-negotiable here — duplicate or missing rows break trust downstream.

Powering customer-facing metrics and leaderboards Think GitHub’s streak counter, Spotify’s “listeners right now,” or any gaming leaderboard. A streaming job continuously updates Redis, a database, or a materialized view so the number users see on the website or app is effectively current. Even a 30-second delay feels ancient to end users these days.

Instant alerts and human-in-the-loop workflows Security teams get paged the moment an account shows impossible travel velocity. Logistics platforms text warehouse workers the second a same-day-delivery order comes in. Trading desks flash an alert when a stock hits a threshold. These are usually simple pattern matches or threshold checks, but they have to trigger reliably and fast.

Real-time fraud, risk, and abuse prevention Banks decline sketchy credit-card transactions, ride-sharing apps block riders with suspicious patterns, and content platforms kill spam bots — all while the event is still happening. These jobs typically keep a small rolling window of per-user history in state and score each new event the moment it arrives.

Dynamic pricing and bidding Airlines, hotels, e-commerce sites, and ad exchanges recalculate prices or bids on the fly based on demand signals that are streaming in. A few seconds of extra latency can literally cost millions.

Continuously evolving recommendations and search ranking Netflix, YouTube, Amazon, and virtually every large consumer app now blend historical signals with what the user (and everyone else) is doing right this second. Structured Streaming jobs keep the “trending” or “freshness” features up to date and feed them into the serving layer.

Online learning loops (the holy grail) The most ambitious teams don’t just detect fraud with fixed rules — they retrain the model every few minutes using the latest transactions from millions of customers. Search and recommendation teams do the same: ingest clicks and views, update embeddings or ranking models, and push the new parameters back into production without anyone clicking “deploy.”

Far from being exotic edge cases, these patterns represent what thousands of companies actually run on Structured Streaming today. Most start with one or two of the simpler ones (dashboards + low-latency ETL) and gradually layer on the more sophisticated capabilities as the business value becomes obvious.

Why Streaming Wins? (and Where It Hurts)

Batch processing is honestly easier: you load a finite dataset, run a job, debug with a notebook, and you’re done. It’s also brutally efficient when you can throw thousands of cores at a fixed pile of data. So why do companies still invest heavily in streaming? Two big reasons usually tip the scale.

Latency that actually matters When the business requirement is “react in seconds (or milliseconds), not hours,” batch simply can’t compete. Fraud detection, intrusion alerts, dynamic pricing, ride matching, ad bidding — none of these can wait for the next scheduled job. Keeping state in memory and processing each record as it lands is the only way to hit single-digit-second (or sub-second) response times.

Automatic incremental computation Imagine you want rolling 24-hour web-traffic stats updated every 5 minutes. A naïve batch approach re-scans the full 24 hours of data seventy-two times a day — a huge waste. A proper streaming system remembers yesterday’s aggregates and only folds in the new 5 minutes of data. You get the same answer with a fraction of the compute. In batch world you’d have to hand-code checkpointing, state management, and incremental logic yourself. Streaming gives you that efficiency for free.

The Price You Pay: Real-World Streaming Challenges

Streaming sounds great on paper, but anyone who has run it in production will tell you it comes with a long list of sharp edges. Here are the headaches that keep streaming engineers up at night:

Out-of-order and late data – Events don’t arrive in timestamp order. A reading from 10 minutes ago can show up after you’ve already processed “now.” If your logic depends on true event-time sequencing (almost everything non-trivial does), you have to buffer, sort in memory, and eventually decide when it’s safe to move forward.

State can explode – Per-user session windows, rolling aggregates, fraud models that remember the last 1,000 actions per card — state adds up fast. Millions of keys × kilobytes each quickly becomes terabytes of RAM that must survive failures.

Exactly-once semantics despite crashes – You can’t lose data and can’t process it twice, even when nodes die, networks partition, or you’re deploying a code update.

Load spikes and stragglers – One slow partition or back-pressured sink can stall the entire pipeline, even if 99% of the data is flowing fine.

Low-latency requirements – Users now expect dashboards to refresh in <5 seconds and alerts in <1 second. That puts enormous pressure on every component.

Transactional output – You can’t write half an aggregated window to a dashboard or data warehouse and let users see inconsistent numbers. Sinks have to support idempotent or transactional writes.

Joining streams with slow-changing reference data – Enriching clicks with user profiles, product catalog changes, or geo-IP tables that live in PostgreSQL or S3.

Live code upgrades – Business rules change constantly. How do you push a new fraud model or pricing formula without dropping or duplicating events?

Knowing when a result is “final” – With unbounded data, how long do you wait for possible late events before you declare a window closed and emit the total?

Every major streaming system — Structured Streaming, Flink, Kafka Streams, etc. — is basically exists to hide (or at least soften) these problems behind a clean API. The fact that Spark makes most of them feel almost invisible is a big reason it has become the default choice for so many teams. But make no mistake: under the hood, Spark is still solving every single one of these hard problems for you.

How Streaming Systems Are Built: The Big Design Choices

Over the years, the streaming world has settled on a few fundamental architectural decisions. Every modern system (Structured Streaming, Flink, Kafka Streams, Dataflow, etc.) picks a side on each of these axes. Understanding them helps you see why Spark made the choices it did — and why those choices work so well for most real-world workloads.

1. Low-level “record-at-a-time” vs declarative APIs

Old-school systems like Storm or Samza give you an event, a callback, and a toolbox of queues. You’re in full control… and fully responsible for everything: state management, duplication handling, checkpointing, failure recovery, exactly-once semantics. It’s powerful when you need every last microsecond or a custom topology, but it’s also brutally hard to get right and nearly impossible to maintain as the team grows.

Newer systems go declarative: you say what result you want (a SQL query, a DataFrame transformation, a beam pipeline), and the engine figures out how to incrementalize it, store state safely, survive crashes, and optimize the plan. Spark started this trend with DStreams (functional style) and took it all the way with Structured Streaming, where you literally write batch-style DataFrame code and Spark turns it into a fault-tolerant streaming job automatically. Less code, fewer bugs, happier engineers.

2. Processing time vs event time

Processing-time semantics are simple: “do something the moment the record hits my cluster.” Great when everything happens in one datacenter and clocks are perfectly in sync. Useless the moment you have mobile devices, third-party webhooks, or even multi-region replication — data shows up late and out of order all the time.

Event-time semantics let you reason about “what actually happened when,” no matter when the message arrives. Windowed counts, sessionization, and pattern detection suddenly become correct instead of “best effort.” The price is that the engine now has to buffer state, handle late data gracefully, and let you define watermarks (“no event will ever be more than X late”). Structured Streaming bakes native event-time support into every operation — you don’t have to think about it until you need it.

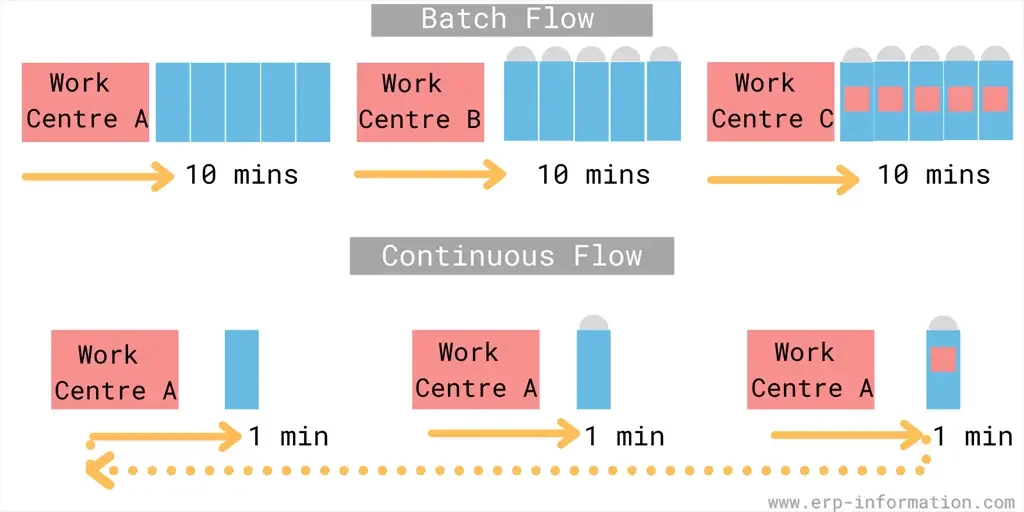

3. Continuous processing vs micro-batch execution

Continuous (sometimes called “native” or “true” streaming): Each operator is a long-running process that pushes records downstream the instant they arrive. Think Flink’s default mode or Kafka Streams. Pros: can hit single-millisecond latency when load is moderate. Cons: per-record overhead is high, fixed topology makes rebalancing painful, and throughput per node is usually lower.

Micro-batch: Wait a tiny bit (100 ms – 1 s), collect whatever arrived, then run a tiny batch job on that slice using the full power of the batch engine (vectorized execution, code-gen, dynamic allocation, etc.). This is the classic Spark Structured Streaming model. Pros: insane throughput, tiny operational cost, easy elasticity, same optimizations as your batch jobs. Cons: you pay the micro-batch interval as minimum latency (usually 100 ms–1 s is totally fine for 95% of use cases).

Spark now actually offers both: the battle-tested micro-batch engine for the majority of workloads, and an experimental (now production-ready in newer releases) Continuous Processing mode when you truly need sub-100 ms end-to-end latency under the exact same API.

In practice, most companies discover that 100–500 ms latency is more than good enough, and they’d rather spend 5× fewer nodes and far less operational headache. That’s why micro-batch + declarative + event-time became the winning combo for Structured Streaming — and why it’s quietly eating huge chunks of the streaming market.

Spark’s Two Streaming APIs (and Why Only One Matters Now)

Spark actually ships with two streaming stories — one from the past, one that defines the future.

DStreams (the original Spark Streaming)

Launched in 2012, DStreams brought declarative streaming to the masses before most people even knew they needed it. You wrote functional transformations (map, reduceByKey, window, etc.) on a stream of RDDs, and Spark chopped the stream into tiny micro-batches behind the scenes. It delivered rock-solid exactly-once guarantees with almost zero extra code, scaled to thousands of nodes, and let you mix streaming and batch RDD code freely. For years it dominated surveys and production deployments — and plenty of companies still run it happily today.

But time moved on. DStreams is built on raw Java/Python objects and RDDs, so it misses all the Catalyst optimizer and Tungsten goodies. It has zero native event-time support (you had to roll your own bucketing and sorting), and the micro-batch interval leaks into the API everywhere. It works, but it feels old.

Structured Streaming (the present and future)

Introduced in Spark 2.0 and dramatically improved ever since, Structured Streaming is built directly on the DataFrame/Dataset engine — the same one you use for batch SQL. You literally write normal DataFrame code (or plain SQL) and tell Spark “run this continuously on streaming data.” That’s it.

What you get in exchange:

Full Catalyst optimization and code generation → much faster execution

Native event-time processing and watermarks baked into every window, aggregation, and join

Automatic state management, checkpointing, and exactly-once output

The same code works for batch and streaming — no more maintaining two versions

Output modes (append, update, complete) that make sinks behave correctly

Seamless integration with Delta Lake, Parquet, Kafka, databases, dashboards, etc.

Ability to query streaming state with regular Spark SQL or interactive notebooks

(Since Spark 3.0+) a production-ready Continuous Processing mode for sub-100 ms latency when you truly need it

The vision is simple but powerful: one unified Spark platform for batch, streaming, and interactive workloads. No separate teams, no divergent codebases, no learning three different APIs.

Conclusion: Why Structured Streaming Is the Right Bet Today

Real-time data isn’t a niche anymore — it’s table stakes. Every dashboard wants to be live, every data warehouse wants fresh tables, every product feature wants to react instantly. At the same time, engineering teams are tired of running a zoo of specialized streaming systems that each solve one problem brilliantly and everything else poorly.

Structured Streaming delivers the rare combination very few systems have ever achieved:

An API so simple you write batch-style code and get a correct, fault-tolerant stream

Performance and cost-efficiency that come from riding the same optimized engine that already processes petabytes in batch

Native event-time, watermarks, and state handling that make late and out-of-order data stop being scary

Deep integration with the rest of the Spark ecosystem (Delta Lake, MLlib, notebooks, SQL warehouses)

Start with a 100-millisecond micro-batch for your low-latency ETL or live dashboard. Add event-time windows and watermarks when reality gets messy. Switch on Continuous Processing if you ever need single-millisecond alerts. And tomorrow, when marketing wants to retrain the recommendation model every five minutes, you’ll do it with the exact same DataFrame you already wrote.

That unification — one engine, one API, one set of skills — is the real killer feature. The rest of the industry is still catching up.

So if you’re building anything real-time on Spark today, there’s no debate: Structured Streaming is the only API you should be writing. It’s faster, simpler, more correct, and future-proof. Everything else is legacy.